Building an Automated CI/CD Pipeline for Applications with Dynamically Scaling Jenkins and Kubernetes

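This post uses Jenkins Pipeline to build a CI/CD pipeline on Kubernetes for code hosted on GitLab. Following a cloud-native approach, both the Jenkins master and the Jenkins slaves are deployed on Kubernetes, which gives a highly available, elastically scalable CI/CD pipeline.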

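Project architecture

- Code is pushed to the hosted repository.
- GitHub triggers the Jenkins pipeline project through a webhook.
- The Jenkins master assigns a Kubernetes slave as the execution environment for the project, and k8s starts the slave pod.
- The Jenkins slave pod runs the tasks defined in the pipeline; the first step pulls the code from the private code repository.
- The Jenkins slave pod runs the code tests, then builds the image according to the layout of the code repository.
- The Jenkins slave pod pushes the image to Harbor.
- The Jenkins slave pod runs the update task for the application service.
- The node hosting the application pod pulls the new image and swaps it in, which completes the application update.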

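Install the plugins:

- Kubernetes CLI Plugin
- Kubernetes Plugin
- Subversion Plugin
- Git Plugin
- Maven Integration Plugin
- Pipeline
- Blue Ocean

Benefits of dynamically provisioning Jenkins agents on a Kubernetes cluster:

The Kubernetes plugin lets Jenkins provision slaves dynamically on a Kubernetes cluster (relying on the Kubernetes scheduler to balance the load). Each slave runs a single build, then is unregistered and its container is deleted.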

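High availability: when the Jenkins master fails, Kubernetes automatically creates a new Jenkins master container and attaches the volume to it, so no data is lost and the service stays available.

Dynamic scaling: resources are used efficiently. Each time a job runs, a Jenkins slave is created automatically; when the job finishes, the slave unregisters itself and its container is deleted, which releases the resources. Kubernetes also schedules each slave onto an idle node based on resource usage, which reduces the chance of jobs queueing on a node that is already heavily loaded.

Scalability: when the cluster runs so short of resources that jobs start to queue, you can easily add another Kubernetes node to the cluster.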

Deploying the Jenkins master on Kubernetes

Create the namespace and the Jenkins RBAC objects

apiVersion: v1
kind: Namespace
metadata:
  name: jenkins
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: jenkins
  name: jenkins-admin
  namespace: jenkins
---
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22; v1 has been available since 1.8.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  # A ClusterRoleBinding is cluster-scoped, so it takes no namespace.
  name: jenkins-admin
  labels:
    k8s-app: jenkins
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: jenkins-admin
  namespace: jenkins

Create the Jenkins Deployment

# apps/v1beta1 was removed in Kubernetes 1.16; apps/v1 requires an explicit selector.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins
  namespace: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      app: jenkins
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 2
      maxUnavailable: 0
  template:
    metadata:
      labels:
        app: jenkins
    spec:
      securityContext:
        fsGroup: 1000
      serviceAccountName: jenkins-admin
      containers:
      - name: jenkins
        image: 192.168.19.111/gc/jenkins:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          name: web
          protocol: TCP
        - containerPort: 50000
          name: agent
          protocol: TCP
        volumeMounts:
        - name: jenkinshome
          mountPath: /var/jenkins_home
        env:
        - name: JAVA_OPTS
          # PermSize/MaxPermSize only take effect on Java 7 and earlier.
          value: "-Xms16G -Xmx16G -XX:PermSize=512m -XX:MaxPermSize=1024m -Duser.timezone=Asia/Shanghai"
        - name: TRY_UPGRADE_IF_NO_MARKER
          value: "true"
      volumes:
      - name: jenkinshome
        nfs:
          server: 192.168.7.206
          path: "/opt/jenkins-test"
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: jenkins
  name: jenkins
  namespace: jenkins
spec:
  ports:
  - port: 8080
    targetPort: 8080
    name: web
  - port: 50000
    targetPort: 50000
    name: agent
  selector:
    app: jenkins
---
# extensions/v1beta1 is kept as in the original setup; it was removed in Kubernetes 1.22,
# where Ingress lives in networking.k8s.io/v1 with a different backend syntax.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: jenkins
  namespace: jenkins
spec:
  rules:
  - host: jenkins.test.lan
    http:
      paths:
      - path: /
        backend:
          serviceName: jenkins
          servicePort: 8080

The required YAML files are on GitHub:

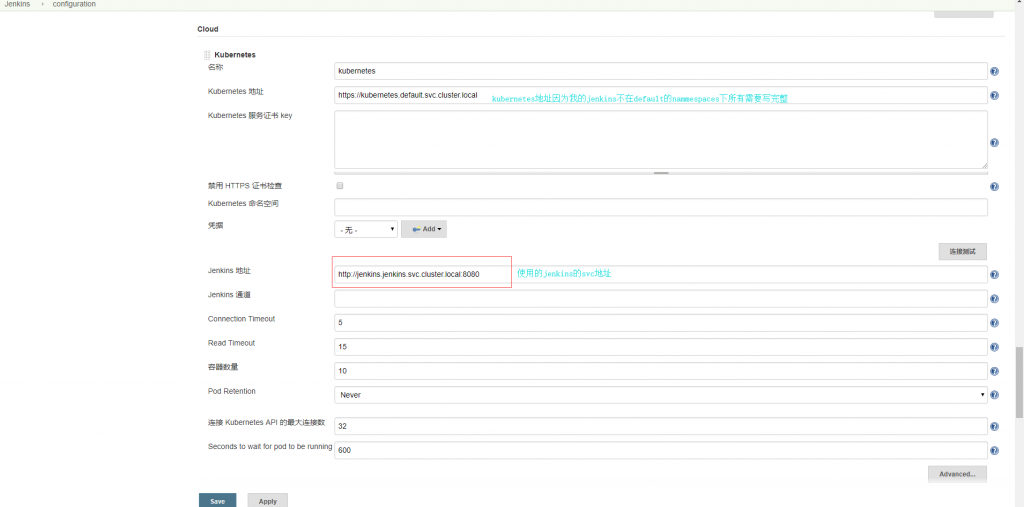
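As a rough sketch of how the manifests above might be applied and checked, the shell function below applies two files and waits for the rollout to finish. The file names `jenkins-rbac.yaml` and `jenkins-deployment.yaml` and the function name `deploy_jenkins` are assumptions (the post does not name its files); the namespace and Deployment name come from the manifests.

```shell
#!/bin/sh
# Sketch: apply the Jenkins manifests and wait until the Deployment is ready.
# jenkins-rbac.yaml and jenkins-deployment.yaml are hypothetical file names.
deploy_jenkins() {
    kubectl apply -f jenkins-rbac.yaml &&
    kubectl apply -f jenkins-deployment.yaml &&
    kubectl -n jenkins rollout status deployment/jenkins --timeout=300s
}

# Call it from a shell whose kubeconfig points at the target cluster:
#   deploy_jenkins
```

Chaining with `&&` stops at the first failing step, so the rollout check only runs when both files were accepted by the API server.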

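Configuring the Kubernetes plugin

https://github.com/jenkinsci/kubernetes-plugin/blob/master/README.md

Consider how images get built: if the application has to be compiled and tested, a build takes a long time. And if every build runs locally, that is fine for a single user, but several people building at once will cause congestion.

To solve this, we can take advantage of Kubernetes container orchestration. When Jenkins receives a job, it calls the Kubernetes API to create new agent pods and dispatches the job to them. The agent pods run the job and report the results back to the Jenkins pod, and the agent pods that have finished are deleted.

To make this work, Jenkins needs a plugin: the Jenkins Kubernetes plugin.

After installing the plugin, go to "Manage Jenkins" -> "Configure System" -> "Add a new cloud" -> "Kubernetes", then fill in the Kubernetes and Jenkins settings. Beyond the required fields, set the others, such as the namespace, according to your needs.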
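When Jenkins itself runs inside the cluster, the cloud configuration can point at in-cluster Service DNS names. The sketch below derives candidate values for the plugin's "Kubernetes URL", "Jenkins URL", and "Jenkins tunnel" fields from the Service and namespace names used in this post. It assumes the default cluster domain `cluster.local`, and the helper name `svc_fqdn` is made up for illustration.

```shell
#!/bin/sh
# Build the in-cluster DNS name of a Service:
#   <service>.<namespace>.svc.<cluster-domain>
# $1 = service name, $2 = namespace, $3 = cluster domain (assumed default: cluster.local)
svc_fqdn() {
    printf '%s.%s.svc.%s' "$1" "$2" "${3:-cluster.local}"
}

# The API server is exposed as the "kubernetes" Service in the "default" namespace.
KUBERNETES_URL="https://$(svc_fqdn kubernetes default)"
# The Jenkins Service in this post exposes 8080 (web) and 50000 (agent).
JENKINS_URL="http://$(svc_fqdn jenkins jenkins):8080"
# The tunnel field takes host:port without a scheme.
JENKINS_TUNNEL="$(svc_fqdn jenkins jenkins):50000"

echo "Kubernetes URL: $KUBERNETES_URL"
echo "Jenkins URL:    $JENKINS_URL"
echo "Jenkins tunnel: $JENKINS_TUNNEL"
```

If the cluster uses a different DNS domain, pass it as the third argument to `svc_fqdn`.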

创建应该Pipeline动态构建测试

def label = "mypod-${UUID.randomUUID().toString()}"

podTemplate(label: label, cloud: 'kubernetes') {

node(label) {

stage('Run shell') {

sh 'sleep 10s'

sh 'echo hello world.'

}

}

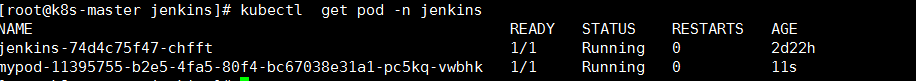

}可以看到在jenkins的namespaces下自动创建了对应的agent pod 相当于就是一个jenkins 的node 当任务执行完成这个pod会自动退出j这个pod默认会去pull一个jenkins/jnlp-slave:alpine的镜像
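To watch the agent pod appear and disappear while the job runs, something like the following can be used. It is a minimal sketch: `watch_agents` is a hypothetical helper name, and the default namespace `jenkins` is the one created earlier in this post.

```shell
#!/bin/sh
# Sketch: stream pod changes in the namespace where the agents are created.
# $1 = namespace (defaults to "jenkins", the namespace used in this post)
watch_agents() {
    kubectl -n "${1:-jenkins}" get pods --watch
}

# Start it in a second terminal before triggering the build; stop with Ctrl-C:
#   watch_agents
```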

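podTemplate(label: 'pod-golang',
    containers: [
        containerTemplate(
            name: 'golang',
            image: 'golang',
            ttyEnabled: true,
            command: 'cat'
        )
    ]
) {
    node('pod-golang') {
        stage('Switch to Utility Container') {
            container('golang') {
                sh 'go version'
            }
        }
    }
}

The podTemplate above is defined inside the Pipeline.

* Note: to replace the agent image, the container must be named jnlp. With any other name, the pod starts two containers: yours plus the default jnlp container.

Only when the container is named jnlp is the image you specify used in place of the default jenkinsci/jnlp-slave image.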

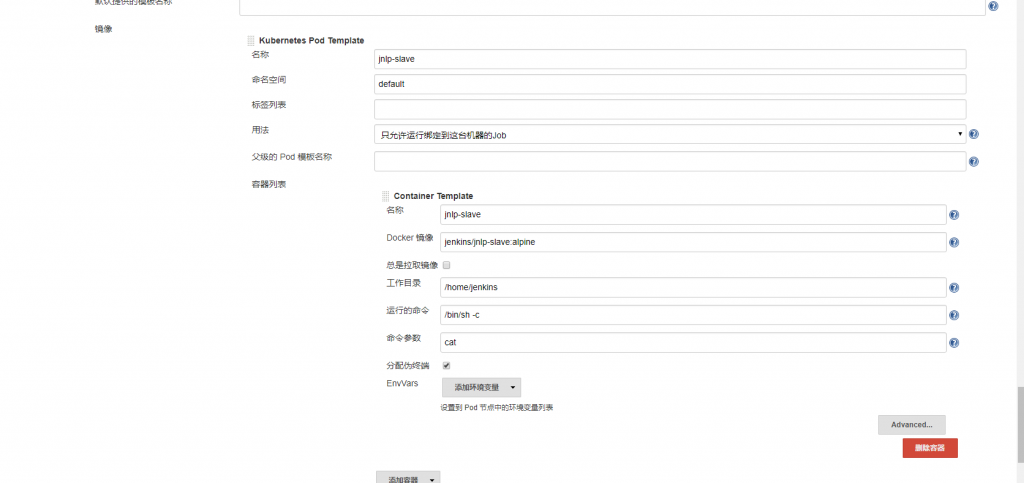

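Defining a Kubernetes pod template outside a Pipeline

Sometimes we do not want to use a Pipeline or a Jenkinsfile, yet a freestyle project still has to run on a pod template. How do we specify that?

Add a Kubernetes pod template

Create a freestyle project

Inside the Kubernetes cluster, /var/run/docker.sock is mounted from the host into the pod (so the agent shares the host's Docker socket). Add the jenkins user to the docker group so that Jenkins can access /var/run/docker.sock directly:

# usermod -a -G docker jenkins

The Kubernetes pod template is the template used to create agents. It uses the image "jenkins/jnlp-slave:alpine" (a custom image also works). When the configuration is done, click Save.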

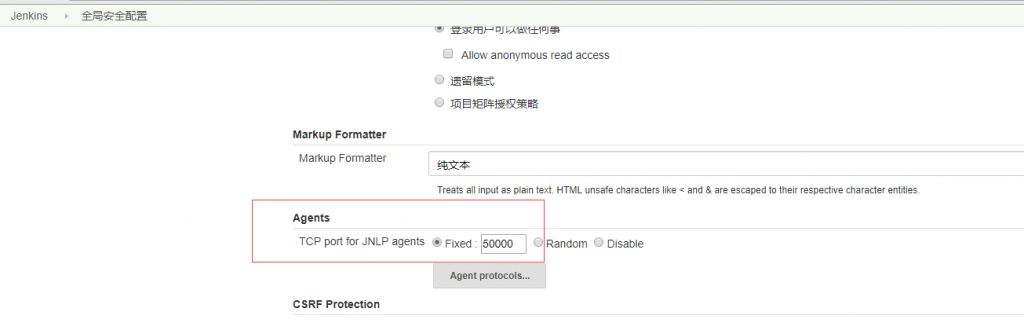

Under Manage Jenkins -> Configure Global Security, set the agent port to 50000, the port we defined earlier for communication between the agents and Jenkins.

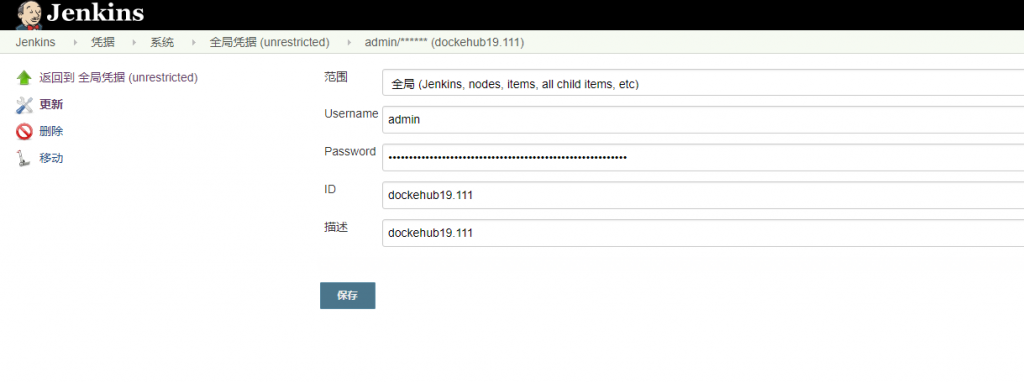

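Configure Jenkins credentials for GitHub, Docker Hub, and Kubernetes; the corresponding pipeline steps use them later.

Kubernetes CLI plugin

The kubectl steps in this post rely on this plugin.

Its job is to let you run kubectl inside the pod.

Generate the Kubernetes credential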

# Create a ServiceAccount named `jenkins-robot` in a given namespace.
$ kubectl -n <namespace> create serviceaccount jenkins-robot

# The next line gives `jenkins-robot` administrator permissions for this namespace.
# * You can make it an admin over all namespaces by creating a `ClusterRoleBinding` instead of a `RoleBinding`.
# * You can also give it different permissions by binding it to a different `(Cluster)Role`.
$ kubectl -n <namespace> create rolebinding jenkins-robot-binding --clusterrole=cluster-admin --serviceaccount=<namespace>:jenkins-robot

# Get the name of the token that was automatically generated for the ServiceAccount `jenkins-robot`.
$ kubectl -n <namespace> get serviceaccount jenkins-robot -o go-template --template='{{range .secrets}}{{.name}}{{"\n"}}{{end}}'
jenkins-robot-token-d6d8z

# Retrieve the token and decode it using base64 (`--decode` works with both GNU and macOS base64).
$ kubectl -n <namespace> get secrets jenkins-robot-token-d6d8z -o go-template --template '{{index .data "token"}}' | base64 --decode
eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2V[...]

Build your own jnlp image with the tools you need, such as kubectl, mvn, and git, preinstalled

You can build your own slave image by following how jenkinsci/jnlp-slave (https://github.com/jenkinsci/docker-jnlp-slave) is made.

The jenkinsci/jnlp-slave image is itself built on the jenkinsci/slave (https://github.com/jenkinsci/docker-slave) base image.

Dockerfile

FROM jenkinsci/jnlp-slave:latest
COPY kubectl /usr/bin/kubectl
ENTRYPOINT ["jenkins-slave"]

Fixing the "unix /var/run/docker.sock: connect: permission denied" error

FROM jenkins/jnlp-slave:latest
USER root
# TIMEZONE and LANG are not set anywhere in the original Dockerfile;
# the two values below are assumed defaults, adjust them as needed.
ARG TIMEZONE=Asia/Shanghai
ENV LANG=en_US.UTF-8
RUN echo "${TIMEZONE}" > /etc/timezone \
    && echo "$LANG UTF-8" > /etc/locale.gen \
    && apt-get update -q \
    && ln -sf /usr/share/zoneinfo/${TIMEZONE} /etc/localtime
COPY kubectl /usr/bin/kubectl
COPY docker /usr/bin/docker
# Install the Docker packages, then add jenkins to the docker group and grant it
# passwordless sudo so that builds can reach /var/run/docker.sock.
RUN DEBIAN_FRONTEND=noninteractive apt-get install -yq curl apt-utils dialog locales apt-transport-https build-essential bzip2 ca-certificates sudo jq unzip zip gnupg2 software-properties-common \
    && update-locale LANG=$LANG \
    && locale-gen $LANG \
    && DEBIAN_FRONTEND=noninteractive dpkg-reconfigure locales \
    && curl -fsSL https://download.docker.com/linux/$(. /etc/os-release; echo "$ID")/gpg | apt-key add - \
    && add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/$(. /etc/os-release; echo "$ID") $(lsb_release -cs) stable" \
    && apt-get update -y \
    && apt-get install -y docker-ce=17.09.1~ce-0~debian \
    && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/* \
    && usermod -a -G docker jenkins \
    && sed -i '/^root/a\jenkins ALL=(ALL:ALL) NOPASSWD:ALL' /etc/sudoers
ENTRYPOINT ["jenkins-slave"]
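Before running Docker builds in an agent, it can help to confirm that the current user can actually read and write the mounted socket. The function below is a small sketch of such a check; `can_use_socket` is a hypothetical helper name, and the path is the socket mounted in this post.

```shell
#!/bin/sh
# Sketch: succeed only if the given path exists and is readable and writable
# by the current user, which is what the Docker client needs on the socket.
can_use_socket() {
    [ -e "$1" ] && [ -r "$1" ] && [ -w "$1" ]
}

if can_use_socket /var/run/docker.sock; then
    echo "docker.sock is accessible as $(id -un)"
else
    echo "docker.sock is missing or not accessible as $(id -un) (groups: $(id -Gn))"
fi
```

Running this as a first `sh` step in a job shows whether the group membership set up in the image matches the ownership of the socket on the node.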

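Add the Kubernetes credential in Jenkins

The service account created when deploying Jenkins is already bound to cluster-admin.

So all that is left is to run the following command to read its token from the Secret:

kubectl -n jenkins get secrets jenkins-admin-token-h649j -o go-template --template '{{index .data "token"}}' | base64 -d

Then add the credential in the Jenkins UI.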

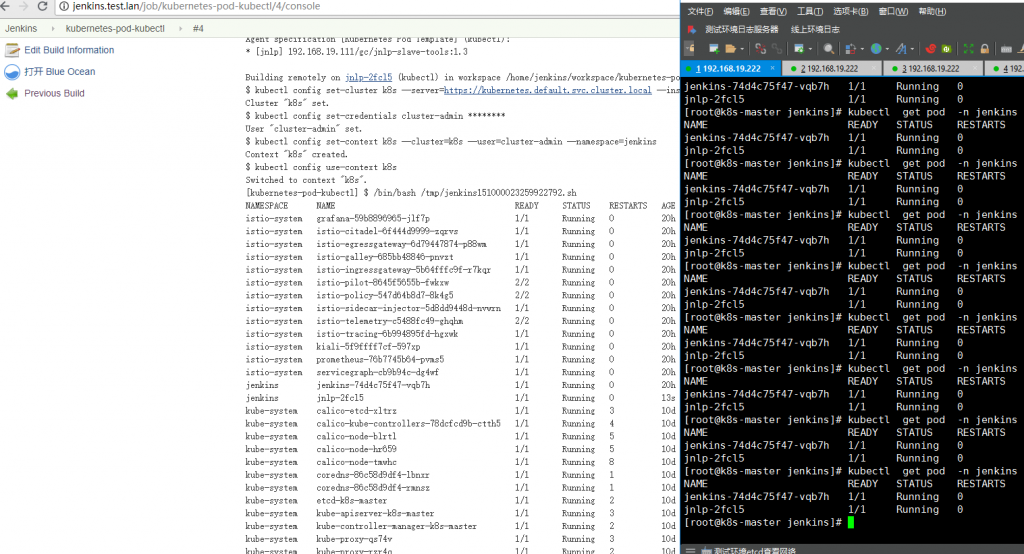

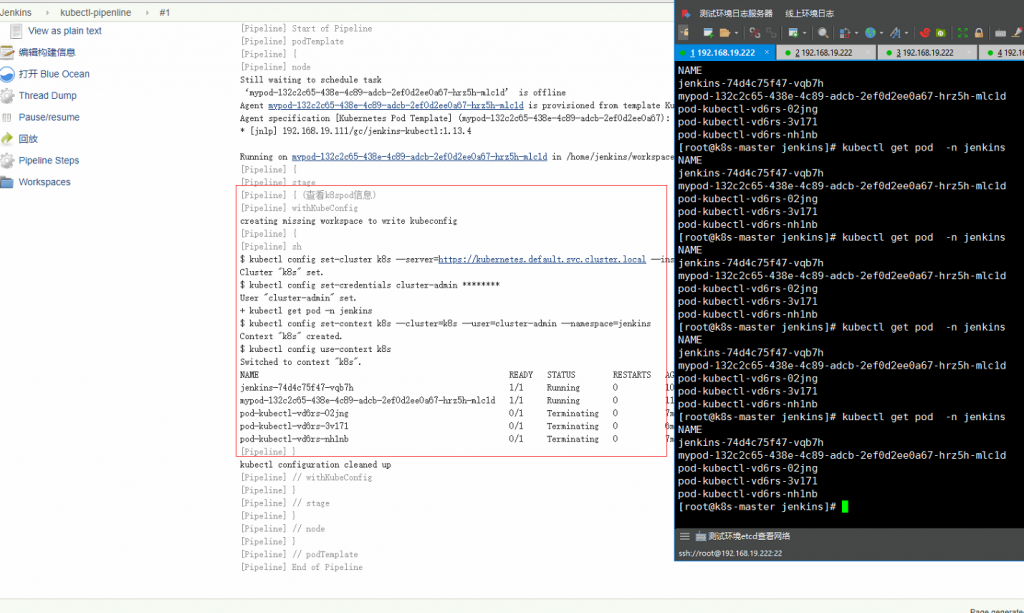
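Values under `.data` in a Secret are base64-encoded, which is why the token is piped through base64 before it is pasted into Jenkins. The round trip below uses a placeholder string rather than a real token, and checks that the decoded value has the three dot-separated segments of a JWT, the format that service-account tokens use.

```shell
#!/bin/sh
# Placeholder value, NOT a real token; it only mimics the JWT shape.
placeholder='header.payload.signature'

# Encode it the way a Secret stores it, then decode it again.
encoded=$(printf '%s' "$placeholder" | base64)
decoded=$(printf '%s' "$encoded" | base64 --decode)

# A decoded service-account token should have three dot-separated segments.
segments=$(printf '%s' "$decoded" | awk -F. '{print NF}')

echo "decoded value has $segments segments"
```

If the pasted value has no dots at all, it is most likely still base64-encoded.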

Create another Pipeline

def label = "mypod-${UUID.randomUUID().toString()}"
podTemplate(label: label, cloud: 'kubernetes', containers: [
    containerTemplate(
        name: 'jnlp',
        image: '192.168.19.111/gc/jenkins-kubectl:1.13.4',
        alwaysPullImage: false,
        args: '${computer.jnlpmac} ${computer.name}')
]) {
    node(label) {
        // Test running kubectl on Kubernetes
        stage('Show k8s pod info') {
            withKubeConfig(caCertificate: '', clusterName: '', contextName: '', credentialsId: 'jenkins-secrets', namespace: 'jenkins', serverUrl: 'https://kubernetes.default.svc.cluster.local') {
                sh 'kubectl get pod -n jenkins'
            }
        }
    }
}

The syntax can be generated by clicking pipeline-syntax.

The following example builds a complete CI/CD pipeline: check out code -> mvn package -> docker build -> deploy to k8s

def label = "my-cicd-demo"
podTemplate(label: label, cloud: 'kubernetes',
    containers: [
        containerTemplate(
            name: 'jnlp',
            image: '192.168.19.111/gc/jnlp-slave-tools:1.3',
            ttyEnabled: true,
            args: '${computer.jnlpmac} ${computer.name}')
    ],
    volumes: [
        hostPathVolume(mountPath: '/var/run/docker.sock', hostPath: '/var/run/docker.sock'),
        hostPathVolume(mountPath: '/home/jenkins/.m2', hostPath: '/home/jenkins/.m2'),
        hostPathVolume(mountPath: '/etc/localtime', hostPath: '/usr/share/zoneinfo/Asia/Shanghai')
    ]
) {
    node(label) {
        // Define all variables
        def project = 'my-project'
        def appName = 'springboot-cicd-demo'
        def serviceName = "${appName}"
        def imageVersion = 'v1'
        def namespace = 'default'
        def feSvcName = "${appName}"
        def imageTag = "192.168.19.111/${project}/${appName}:${imageVersion}"

        // Stage 1: check out the code
        stage('SVN checkout') {
            //checkout([$class: 'SubversionSCM', additionalCredentials: [], excludedCommitMessages: '', excludedRegions: '', excludedRevprop: '', excludedUsers: '', filterChangelog: false, ignoreDirPropChanges: false, includedRegions: '', locations: [[cancelProcessOnExternalsFail: true, credentialsId: 'yanwei-svn', depthOption: 'infinity', ignoreExternalsOption: true, local: '.', remote: 'http://192.168.7.3/svn/zhph_operation/tags/V6.0.0.0305'], [cancelProcessOnExternalsFail: true, credentialsId: 'yanwei-svn', depthOption: 'infinity', ignoreExternalsOption: true, local: './buildimage', remote: 'http://192.168.7.3/svn/zhph_release/trunk/cicd-demo']], quietOperation: true, workspaceUpdater: [$class: 'CheckoutUpdater']])
            checkout([$class: 'SubversionSCM', additionalCredentials: [], excludedCommitMessages: '', excludedRegions: '', excludedRevprop: '', excludedUsers: '', filterChangelog: false, ignoreDirPropChanges: false, includedRegions: '', locations: [[cancelProcessOnExternalsFail: true, credentialsId: 'yanwei-svn', depthOption: 'infinity', ignoreExternalsOption: true, local: '.', remote: 'http://192.168.7.3/svn/zhph_release/trunk/springboot-cicd-demo']], quietOperation: true, workspaceUpdater: [$class: 'UpdateUpdater']])
        }

        // Stage 2: build the war/jar package with mvn
        stage('mvn Build') {
            sh 'id'
            sh 'mvn clean install'
        }

        // Stage 3: build the Docker image and push it to the private registry
        stage('docker build push') {
            //sh 'mv ${WORKSPACE}/target/helloworld-0.0.1-SNAPSHOT.jar .'
            withDockerRegistry(credentialsId: 'dockerhub19111', url: 'http://192.168.19.111') {
                def customImage = docker.build("${imageTag}", '.')
                customImage.push()
                //sh 'docker inspect -f {{.Id}} "${imageTag}"'
            }
        }

        // Stage 4: deploy the application
        stage('Deploy Application') {
            switch (namespace) {
                // Roll out to the development environment
                case "development":
                    withKubeConfig(caCertificate: '', clusterName: '', contextName: '', credentialsId: 'jenkins-secrets', namespace: 'jenkins', serverUrl: 'https://kubernetes.default.svc.cluster.local') {
                        // Create the namespace if it doesn't exist
                        sh("kubectl get ns ${namespace} || kubectl create ns ${namespace}")
                        // Update the image tag. With the variables defined above, the pattern and
                        // imageTag are the same string, so this only changes the file when they differ.
                        sh("sed -i.bak 's#192.168.19.111/${project}/${appName}:${imageVersion}#${imageTag}#' k8s/development/*.yaml")
                        // Create or update resources
                        sh("kubectl --namespace=${namespace} apply -f k8s/development/deployment.yaml")
                        sh("kubectl --namespace=${namespace} apply -f k8s/development/service.yaml")
                        // Grab the external IP address of the service
                        sh("echo http://`kubectl --namespace=${namespace} get service/${feSvcName} --output=json | jq -r '.status.loadBalancer.ingress[0].ip'` > ${feSvcName}")
                    }
                    // break must sit outside the closure, directly in the switch
                    break

                // Roll out to the production environment
                case "production":
                    withKubeConfig(caCertificate: '', clusterName: '', contextName: '', credentialsId: 'jenkins-secrets', namespace: 'jenkins', serverUrl: 'https://kubernetes.default.svc.cluster.local') {
                        // Create the namespace if it doesn't exist
                        sh("kubectl get ns ${namespace} || kubectl create ns ${namespace}")
                        // Update the image tag (see the note in the development case)
                        sh("sed -i.bak 's#192.168.19.111/${project}/${appName}:${imageVersion}#${imageTag}#' k8s/production/*.yaml")
                        // Create or update resources
                        sh("kubectl --namespace=${namespace} apply -f k8s/production/deployment.yaml")
                        sh("kubectl --namespace=${namespace} apply -f k8s/production/service.yaml")
                        // Grab the external IP address of the service
                        sh("echo http://`kubectl --namespace=${namespace} get service/${feSvcName} --output=json | jq -r '.status.loadBalancer.ingress[0].ip'` > ${feSvcName}")
                    }
                    break

                // Any other namespace: deploy the development manifests into it
                default:
                    withKubeConfig(caCertificate: '', clusterName: '', contextName: '', credentialsId: 'jenkins-secrets', namespace: 'jenkins', serverUrl: 'https://kubernetes.default.svc.cluster.local') {
                        sh("kubectl get ns ${namespace} || kubectl create ns ${namespace}")
                        sh("sed -i.bak 's#192.168.19.111/${project}/${appName}:${imageVersion}#${imageTag}#' k8s/development/*.yaml")
                        sh("kubectl --namespace=${namespace} apply -f k8s/development/deployment.yaml")
                        sh("kubectl --namespace=${namespace} apply -f k8s/development/service.yaml")
                        //sh("echo http://`kubectl --namespace=${namespace} get service/${feSvcName} --output=json | jq -r '.status.loadBalancer.ingress[0].ip'` > ${feSvcName}")
                        sh("kubectl get pod --namespace=${namespace}")
                    }
            }
        }
    }
}
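In the pipeline above, `imageTag` is built from the same `project`, `appName`, and `imageVersion` values that the sed pattern uses, so the substitution only has an effect when the manifest carries a different tag. One possible variation, sketched below, matches the image name with any tag and rewrites it. The manifest content, the temporary directory, and the tag `v2` are made up so that the example is self-contained; the registry and image name are the ones used in this post.

```shell
#!/bin/sh
# Hypothetical manifest fragment, written to a temp dir for the demonstration.
workdir=$(mktemp -d)
cat > "$workdir/deployment.yaml" <<'EOF'
spec:
  template:
    spec:
      containers:
      - name: springboot-cicd-demo
        image: 192.168.19.111/my-project/springboot-cicd-demo:v1
EOF

image="192.168.19.111/my-project/springboot-cicd-demo"
new_tag="v2"   # hypothetical; in a job this could be the build number

# Replace whatever tag follows the image name with the new one.
# -i.bak keeps the previous version next to the edited file.
sed -i.bak "s#\(image: *${image}\):[^[:space:]]*#\1:${new_tag}#" "$workdir/deployment.yaml"

grep 'image:' "$workdir/deployment.yaml"
```

Because the pattern no longer hard-codes the old tag, the same command keeps working from one release to the next.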