Quickly deploying Kubernetes 1.13.3 with kubeadm, without a VPN

Node plan

master 192.168.19.222

node1 192.168.19.223

node2 192.168.19.224

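Software versions

Operating system: CentOS Linux release 7, kernel 4.4.174-1.el7.elrepo.x86_64

Docker version: 18.06.2-ce

Kubernetes version: 1.13.3

Environment preparation

Set the hostname (run the matching command on each machine)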

hostnamectl --static set-hostname k8s-master

hostnamectl --static set-hostname node1

hostnamectl --static set-hostname node2

Configure passwordless SSH login

On the master, generate a public/private key pair with ssh-keygen:

ssh-keygen -t rsa -b 1024

Copy the public key to each remote machine with ssh-copy-id:

ssh-copy-id -i ~/.ssh/id_rsa.pub remote-host
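With three machines, the copy step can be looped. A minimal sketch, assuming the hostnames from the node plan resolve (for example through /etc/hosts) and that root is the login user; `print_copy_commands` is a hypothetical helper that only prints the commands so they can be reviewed first:

```shell
# Nodes from the node plan above; adjust to your environment.
NODES="k8s-master node1 node2"

# Hypothetical helper: print one ssh-copy-id command per node (dry run).
# $1 is the public key path; it defaults to ~/.ssh/id_rsa.pub.
print_copy_commands() {
  key="${1:-$HOME/.ssh/id_rsa.pub}"
  for node in $NODES; do
    echo "ssh-copy-id -i $key root@$node"
  done
}

print_copy_commands
```

Once the printed commands look right, they can be executed with `print_copy_commands | bash`.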

Disable the firewall on all nodes

[root@k8s-master ~]# systemctl stop firewalld && systemctl disable firewalld

Disable SELinux on all nodes

[root@k8s-master ~]# setenforce 0

[root@k8s-master ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

Set up /etc/hosts on all nodes

[root@k8s-master ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.19.222 k8s-master

192.168.19.223 node1

192.168.19.224 node2

Disable swap on all nodes

[root@k8s-master ~]# swapoff -a

[root@k8s-master ~]# sed -i '/swap/d' /etc/fstab

Kernel parameters on all nodes

[root@k8s-master ~]# cat > /etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness=0
EOF

[root@k8s-master ~]# modprobe br_netfilter

[root@k8s-master ~]# sysctl -p /etc/sysctl.d/k8s.conf

Upgrade the CentOS kernel

#!/bin/bash
# Import the ELRepo public key
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
# Install ELRepo
rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
# Load the elrepo-kernel metadata
yum --disablerepo=\* --enablerepo=elrepo-kernel repolist
# List the available kernel packages
yum --disablerepo=\* --enablerepo=elrepo-kernel list 'kernel*'
# Install the latest long-term kernel
yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-lt.x86_64
# Remove the old tools packages
yum remove kernel-tools-libs.x86_64 kernel-tools.x86_64 -y
# Install the new tools package
yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-lt-tools.x86_64
# Show the boot menu order
awk -F\' '$1=="menuentry " {print $2}' /etc/grub2.cfg
# Entries are numbered from 0 and a new kernel is inserted at the head
# (here the new kernel is at 0 and 4.4.4 is at 1), so select 0.
grub2-set-default 0
# Reboot, then check the running kernel
reboot

Install Docker on all nodes

Add the Docker yum repository:

[root@k8s-master ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@k8s-master ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

List the available Docker versions:

[root@k8s-master ~]# yum list docker-ce.x86_64 --showduplicates | sort -r
Loaded plugins: fastestmirror
Installed Packages
Available Packages
 * updates: centos.ustc.edu.cn
Loading mirror speeds from cached hostfile
 * extras: centos.ustc.edu.cn
 * elrepo: mirrors.tuna.tsinghua.edu.cn
docker-ce.x86_64    3:18.09.2-3.el7            docker-ce-stable
docker-ce.x86_64    3:18.09.1-3.el7            docker-ce-stable
docker-ce.x86_64    3:18.09.0-3.el7            docker-ce-stable
docker-ce.x86_64    18.06.2.ce-3.el7           docker-ce-stable
docker-ce.x86_64    18.06.2.ce-3.el7           @docker-ce-stable
docker-ce.x86_64    18.06.1.ce-3.el7           docker-ce-stable
docker-ce.x86_64    18.06.0.ce-3.el7           docker-ce-stable
docker-ce.x86_64    18.03.1.ce-1.el7.centos    docker-ce-stable
docker-ce.x86_64    18.03.0.ce-1.el7.centos    docker-ce-stable
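Because the list mixes 18.03, 18.06 and 18.09 builds, it can help to narrow it to one series before installing. A minimal sketch, assuming the name / version / repository column layout shown above; `list_series` is a hypothetical helper that reads `yum list` output on stdin:

```shell
# Hypothetical helper: print the unique docker-ce versions of one series
# (for example 18.06) found in `yum list ... --showduplicates` output.
list_series() {
  series="$1"
  awk -v s="$series" '
    $1 == "docker-ce.x86_64" {
      v = $2
      sub(/^[0-9]+:/, "", v)             # drop an epoch prefix such as "3:"
      if (index(v, s ".") == 1) print v  # keep versions starting with "<series>."
    }' | sort -u
}

# Usage on a live system (not run here):
#   yum list docker-ce.x86_64 --showduplicates | list_series 18.06
```

The version printed can then be appended to the package name, as in `docker-ce-18.06.2.ce-3.el7`.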

Install Docker 18.06.2.ce-3.el7 on every node:

[root@k8s-master ~]# yum install -y --setopt=obsoletes=0 docker-ce-18.06.2.ce-3.el7

[root@k8s-master ~]# systemctl start docker && systemctl enable docker

Configure a Docker registry mirror

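mkdir -p /etc/docker
cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": ["https://registry.docker-cn.com"],
  "insecure-registries": ["192.168.19.10"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m",
    "max-file": "3"
  }
}
EOF
# Docker was already started above, so restart it to pick up daemon.json
systemctl restart docker

Configure the Aliyun Kubernetes repository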

[root@k8s-master ~]# cat > /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
EOF

* The official Google repository below is an alternative, but it requires a VPN. See:

https://kubernetes.io/docs/setup/independent/install-kubeadm/

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kube*

EOF

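Install the kubelet, kubeadm and kubectl packages on all nodes

Pin the packages to 1.13.3; an unpinned install pulls whatever release is newest in the repository.

[root@k8s-master ~]# yum install -y kubelet-1.13.3 kubeadm-1.13.3 kubectl-1.13.3

[root@k8s-master ~]# systemctl enable kubelet && systemctl start kubelet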
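A mismatch between the installed packages and the 1.13.3 images used in the rest of this guide can make initialization fail, so a quick check may be worthwhile. A minimal sketch, assuming v1.13.3 is the target; `same_version` is a hypothetical helper that ignores a leading `v`:

```shell
# Hypothetical helper: succeed if two version strings are equal once an
# optional leading "v" is stripped (so v1.13.3 equals 1.13.3).
same_version() {
  [ "${1#v}" = "${2#v}" ]
}

# Usage on a node (not run here); `kubeadm version -o short` prints e.g. v1.13.3:
#   same_version "$(kubeadm version -o short)" 1.13.3 || echo "kubeadm version mismatch"
```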

Pull the Kubernetes component images with Docker

cat <<EOF > /tmp/get-images.sh
#!/bin/bash
images=(kube-apiserver:v1.13.3 kube-controller-manager:v1.13.3 kube-scheduler:v1.13.3 kube-proxy:v1.13.3 pause:3.1 etcd:3.2.24)
for imageName in "\${images[@]}"; do
  docker pull mirrorgooglecontainers/\$imageName
  docker tag mirrorgooglecontainers/\$imageName k8s.gcr.io/\$imageName
  docker rmi mirrorgooglecontainers/\$imageName
done
EOF

bash /tmp/get-images.sh

docker pull coredns/coredns:1.2.6
docker tag coredns/coredns:1.2.6 k8s.gcr.io/coredns:1.2.6
docker rmi coredns/coredns:1.2.6

Initialize the Kubernetes cluster on the master node

[root@k8s-master ]# kubeadm init --kubernetes-version=v1.13.3 --apiserver-advertise-address 192.168.19.222 --pod-network-cidr=10.244.0.0/16

--kubernetes-version: the Kubernetes version to deploy.

--apiserver-advertise-address: the network interface on the master used for communication. If it is omitted, kubeadm picks the interface that has the default gateway.

--pod-network-cidr: the address range for Pods. The right value depends on the network add-on. 10.244.0.0/16 is the range conventionally used with flannel; this article installs Calico below and changes Calico's pool to match.

The command ends with output like this:

.....
Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node
as root:

  kubeadm join 192.168.19.222:6443 --token glv963.q0y5srs7s7qbna4y --discovery-token-ca-cert-hash sha256:3013d8f7b0cd16f3d3514031b6459851f047e8f0318d84e8515894198986936e
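The join command at the end of this output is what the worker nodes need, so it is worth saving. A minimal sketch, assuming the `kubeadm init` output was captured to a file and that the join command sits on a single line, as it does above; `extract_join` is a hypothetical helper:

```shell
# Hypothetical helper: print the `kubeadm join ...` command found in saved
# `kubeadm init` output read from stdin.
extract_join() {
  grep -o 'kubeadm join [0-9.]*:[0-9]* --token [a-z0-9.]* --discovery-token-ca-cert-hash sha256:[0-9a-f]*'
}

# Usage (not run here), assuming the output was saved with
# `kubeadm init ... | tee /tmp/kubeadm-init.log`:
#   extract_join < /tmp/kubeadm-init.log > /tmp/join.sh
```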

Configure kubectl as the output suggests:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check the cluster status

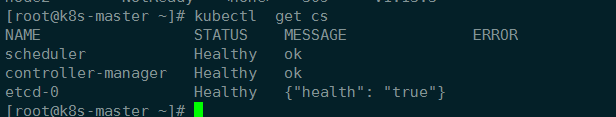

kubectl get cs

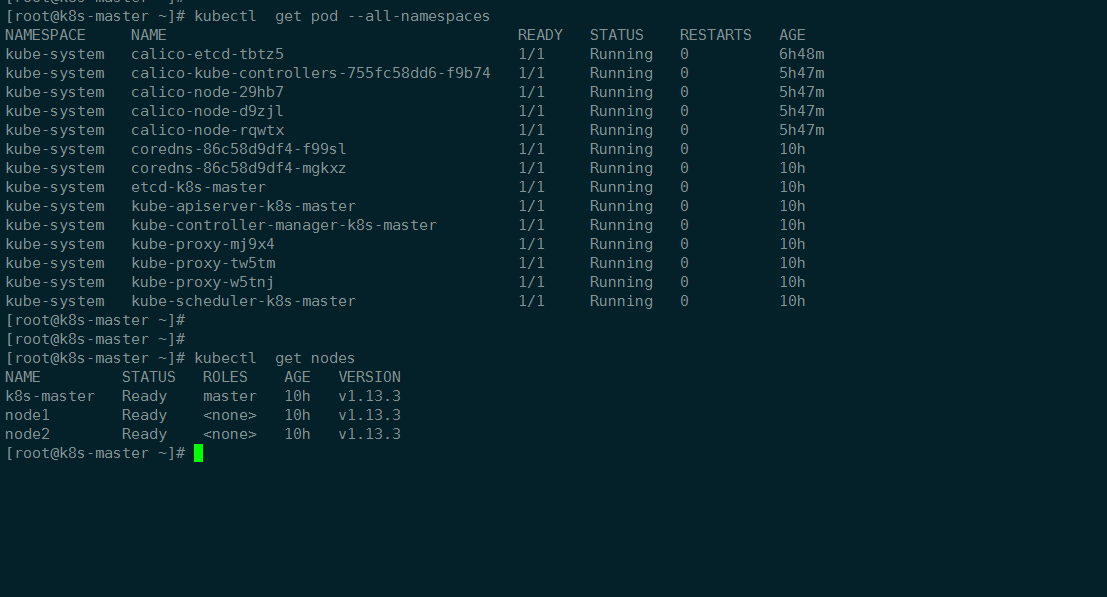

Join node1 and node2 to the cluster by running this command on node1 and node2:

kubeadm join 192.168.19.222:6443 --token glv963.q0y5srs7s7qbna4y --discovery-token-ca-cert-hash sha256:3013d8f7b0cd16f3d3514031b6459851f047e8f0318d84e8515894198986936e

At this point the nodes show NotReady, because no network add-on has been installed yet.

Configure the network with Calico 3.4

https://docs.projectcalico.org/v3.4/getting-started/kubernetes/

https://docs.projectcalico.org/v3.4/getting-started/kubernetes/installation/calico

https://kubernetes.io/docs/setup/independent/create-cluster-kubeadm/

CNI plugin enabled

Calico is installed as a CNI plugin. The kubelet must be configured to use CNI networking by passing the --network-plugin=cni argument. (On kubeadm, this is the default.)

Install an etcd instance with the following command:

kubectl apply -f https://docs.projectcalico.org/v3.4/getting-started/kubernetes/installation/hosted/etcd.yaml

You should see the following output:

daemonset.extensions/calico-etcd created
service/calico-etcd created

kubectl get endpoints --all-namespaces

Download the Calico manifest for etcd

[root@k8s-master]# curl -O https://docs.projectcalico.org/v3.4/getting-started/kubernetes/installation/hosted/calico.yaml

My pod CIDR differs from the official default, so the manifest has to be edited (check etcd_endpoints as well):

POD_CIDR="10.244.0.0/16"
sed -i -e "s?192.168.0.0/16?$POD_CIDR?g" calico.yaml
kubectl apply -f calico.yaml

The CIDR passed to kubeadm init with --pod-network-cidr must match Calico's IP pool. The default IP pool configured in the Calico manifest is 192.168.0.0/16.

When deploying with kubeadm, Calico does not use the etcd service that kubeadm deploys on the Kubernetes master. It creates an etcd pod for its own use, with the service address http://10.96.232.136:6666
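The CIDR substitution is easy to get wrong, so it can be tried on a scratch file before touching calico.yaml. A minimal sketch, assuming GNU sed (as on CentOS) and that the manifest contains the default pool literally as `192.168.0.0/16`; `set_pod_cidr` is a hypothetical wrapper around the same `sed` expression:

```shell
# Hypothetical wrapper: replace Calico's default pool with the given CIDR
# in a manifest file, in place. "?" is used as the sed delimiter because
# the CIDR itself contains "/".
set_pod_cidr() {
  cidr="$1"
  file="$2"
  sed -i -e "s?192.168.0.0/16?${cidr}?g" "$file"
}

# Try it on a throwaway copy of the relevant manifest lines.
tmp=$(mktemp)
printf '%s\n' '- name: CALICO_IPV4POOL_CIDR' '  value: "192.168.0.0/16"' > "$tmp"
set_pod_cidr "10.244.0.0/16" "$tmp"
cat "$tmp"
```

The CIDR is passed as an argument rather than as a one-shot `VAR=value sed ...` prefix, because the shell would expand `$VAR` inside the quoted expression before that assignment takes effect.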

kubectl get svc --all-namespaces
NAMESPACE     NAME          TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
default       kubernetes    ClusterIP   10.96.0.1       <none>        443/TCP    35m
kube-system   calico-etcd   ClusterIP   10.96.232.136   <none>        6666/TCP   24m

Check that the Pods are healthy

[root@k8s-master]# kubectl get pods --all-namespaces

[root@k8s-master]# kubectl get node
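Nodes should move from NotReady to Ready once the network pods are running. A minimal sketch for checking this from a script, assuming the default column layout of `kubectl get node` (NAME, STATUS, ...); `not_ready_nodes` is a hypothetical helper:

```shell
# Hypothetical helper: print the names of nodes whose STATUS column is not
# exactly "Ready". Reads `kubectl get node` output (with header) on stdin.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage on the master (not run here):
#   kubectl get node | not_ready_nodes
```

Empty output means every node reports Ready.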

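At this point the Kubernetes cluster is basically complete.

Tokens can be listed with the command below (a token is valid for 24 hours)

List the tokens, on the master:

[root@k8s-master]# kubeadm token list

Generate a new token:

[root@k8s-master]# kubeadm token create

Get the SHA-256 hash of the CA certificate:

[root@k8s-master]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

* Optional

Enable ipvs for kube-proxy

kube-proxy needs the following kernel modules for ipvs. Load them on every node that runs kube-proxy: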

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

kubectl edit cm kube-proxy -n kube-system

In config.conf, set mode: "ipvs". The running kube-proxy pods must be recreated before the new mode is used.

When the log shows "Using ipvs Proxier", ipvs mode is enabled:

kubectl logs kube-proxy-xxxxx -n kube-system

Clean up the cluster

To remove node2 from the cluster, run the following commands.

On the master:

kubectl drain node2 --delete-local-data --force --ignore-daemonsets
kubectl delete node node2

On node2:

kubeadm reset
rm -rf /var/lib/cni/

Install the dashboard

dashboard.yaml

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---

# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---

# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]

---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

---

# ------------------- Dashboard Deployment ------------------- #

kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.0
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          - --token-ttl=5400
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        hostPath:
          path: /certs
          type: Directory
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

---

# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 31234
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort

Generate the private key and certificate signing request

openssl genrsa -des3 -passout pass:x -out dashboard.pass.key 2048
openssl rsa -passin pass:x -in dashboard.pass.key -out dashboard.key
rm dashboard.pass.key
openssl req -new -key dashboard.key -out dashboard.csr

Press Enter at every prompt. Then generate the SSL certificate:

openssl x509 -req -sha256 -days 365 -in dashboard.csr -signkey dashboard.key -out dashboard.crt

All the certificate files go in /certs. Note that this path is the one used in the Deployment.

Create the dashboard user and permissions

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: admin
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile

Get the token:

kubectl describe secret/$(kubectl get secret -nkube-system |grep admin|awk '{print $1}') -nkube-system
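The describe output contains several fields, and only the `token:` line is needed to log in to the dashboard. A minimal sketch, assuming the `token:      <value>` layout that `kubectl describe secret` prints; `token_from_describe` is a hypothetical helper:

```shell
# Hypothetical helper: print only the token value from
# `kubectl describe secret` output read from stdin.
token_from_describe() {
  awk '$1 == "token:" { print $2 }'
}

# Usage on the master (not run here); the secret name is assumed to start
# with "admin-token", matching the admin ServiceAccount created above:
#   kubectl -n kube-system describe secret \
#     "$(kubectl -n kube-system get secret | awk '/^admin-token/ {print $1}')" \
#     | token_from_describe
```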